I want to store the following pandas DataFrame in a Parquet file using PyArrow:

import pandas as pd

df = pd.DataFrame({'field': [[{}, {}]]})

The type of the field column is a list of dicts:

      field
0  [{}, {}]

I first define the corresponding PyArrow schema:

import pyarrow as pa

schema = pa.schema([pa.field('field', pa.list_(pa.struct([])))])

Then I use from_pandas():

table = pa.Table.from_pandas(df, schema=schema, preserve_index=False)

This raises the following exception:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "table.pxi", line 930, in pyarrow.lib.Table.from_pandas
  File "/anaconda3/lib/python3.6/site-packages/pyarrow/pandas_compat.py", line 371, in dataframe_to_arrays
    convert_types)]
  File "/anaconda3/lib/python3.6/site-packages/pyarrow/pandas_compat.py", line 370, in <listcomp>
    for c, t in zip(columns_to_convert,
  File "/anaconda3/lib/python3.6/site-packages/pyarrow/pandas_compat.py", line 366, in convert_column
    return pa.array(col, from_pandas=True, type=ty)
  File "array.pxi", line 177, in pyarrow.lib.array
  File "error.pxi", line 77, in pyarrow.lib.check_status
  File "error.pxi", line 87, in pyarrow.lib.check_status
pyarrow.lib.ArrowTypeError: Unknown list item type: struct<>

Am I doing something wrong, or is this not supported by PyArrow?

I am using pyarrow 0.9.0, pandas 0.23.4, and Python 3.6.

According to this Jira issue, reading and writing nested Parquet data with a mix of struct and list nesting levels was implemented in version 2.0.0.
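Since the behaviour depends on the installed version, a small guard may be useful before attempting a nested write. The following is only a sketch: the helper names `parse_version` and `supports_nested_parquet` are hypothetical, and the 2.0.0 threshold is simply the version named in the statement above.

```python
import re

# Threshold taken from the statement above; not verified against release notes.
MIN_NESTED_PARQUET = (2, 0, 0)


def parse_version(version):
    """Turn a version string such as '0.17.1' into a 3-tuple of ints.

    Only the leading digits of the first three dot-separated pieces are
    used, and missing pieces are padded with zeros.
    """
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def supports_nested_parquet(version):
    """Return True if the given version string is at least 2.0.0."""
    return parse_version(version) >= MIN_NESTED_PARQUET
```

In practice the argument would be `pyarrow.__version__`, for example `supports_nested_parquet(pa.__version__)`.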

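The following example demonstrates the implemented functionality with a round trip: pandas DataFrame -> Parquet file -> pandas DataFrame.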

The initial pandas DataFrame has one field of type list of dicts and one entry:

                  field
0  [{'a': 1}, {'a': 2}]

Example code:

import pandas as pd
import pyarrow as pa
import pyarrow.parquet

df = pd.DataFrame({'field': [[{'a': 1}, {'a': 2}]]})
schema = pa.schema(
    [pa.field('field', pa.list_(pa.struct([('a', pa.int64())])))])
table_write = pa.Table.from_pandas(df, schema=schema, preserve_index=False)
pyarrow.parquet.write_table(table_write, 'test.parquet')
table_read = pyarrow.parquet.read_table('test.parquet')
table_read.to_pandas()

The output DataFrame is the same as the input DataFrame, as it should be:

                  field
0  [{'a': 1}, {'a': 2}]

Here is a snippet that reproduces this bug:

#!/usr/bin/env python3
import pandas as pd  # type: ignore


def main():
    """Main function"""
    df = pd.DataFrame()
    # Each cell holds a list containing one empty dict.
    df["nested"] = [[dict()] for i in range(10)]
    # The first write succeeds.
    df.to_feather("test.feather")
    print("Success once")
    # Writing the DataFrame back after loading it from Feather fails.
    df = pd.read_feather("test.feather")
    df.to_feather("test.feather")


if __name__ == "__main__":
    main()

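Note that nothing breaks when going from pandas to Feather, but once the DataFrame is loaded from Feather and you try to write it back, it breaks.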

To fix this, simply update to pyarrow 2.0.0:

pip3 install pyarrow==2.0.0

Available pyarrow versions as of 2020-11-16:

0.9.0, 0.10.0, 0.11.0, 0.11.1, 0.12.0, 0.12.1, 0.13.0, 0.14.0, 0.15.1, 0.16.0, 0.17.0, 0.17.1, 1.0.0, 1.0.1, 2.0.0

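I have been able to save pandas DataFrames with array columns to Parquet, and to read them back from Parquet into DataFrames, by converting the DataFrame's object dtypes to str.

# `timezone` is assumed to be defined elsewhere as a timezone name string.
def mapTypes(x):
    # Map a pandas dtype name to the dtype to cast to; object columns become str.
    return {'object': 'str', 'int64': 'int64', 'float64': 'float64', 'bool': 'bool',
            'datetime64[ns, ' + timezone + ']': 'datetime64[ns, ' + timezone + ']'}.get(x, "str")  # string is default if type not mapped

table_names = [x for x in df.columns]
table_types = [mapTypes(x.name) for x in df.dtypes]
parquet_table = dict(zip(table_names, table_types))
df_pq = df.astype(parquet_table)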

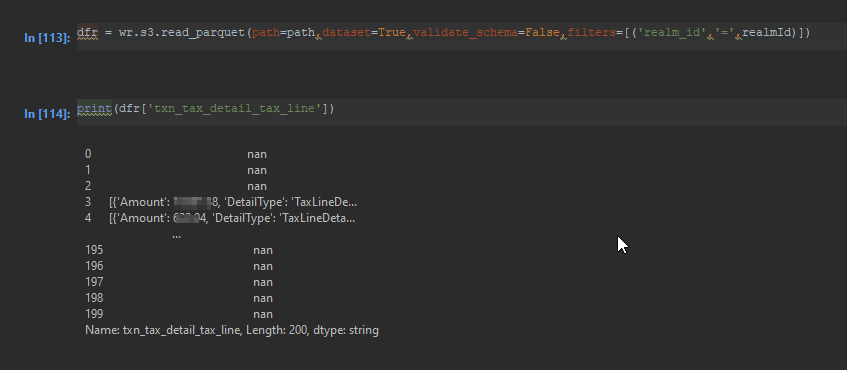

import awswrangler as wr

wr.s3.to_parquet(df=df_pq, path=path, dataset=True, database='test', mode='overwrite', table=table.lower(), partition_cols=['real mid'], sanitize_columns=True)
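One caveat with casting object columns to str is that the result is the Python repr of each cell, which is awkward to parse back into lists and dicts. A possible variation, which is not what the answer above does, is to JSON-encode each cell instead. The sketch below uses only the standard library; `encode_cell` and `decode_cell` are hypothetical helper names, and it assumes every cell contains only JSON-serializable values.

```python
import json


def encode_cell(value):
    """Serialize one nested cell (list of dicts) to a JSON string."""
    return json.dumps(value)


def decode_cell(text):
    """Restore the nested structure from its JSON string."""
    return json.loads(text)


# Stand-in for the values of a nested column such as df['field'].
cells = [[{}, {}], [{"a": 1}, {"a": 2}]]

encoded = [encode_cell(cell) for cell in cells]    # plain strings, safe to store
decoded = [decode_cell(text) for text in encoded]  # nested objects again

assert decoded == cells
```

With a DataFrame, the same helpers could be applied per column, for example `df['field'].map(encode_cell)` before writing and `.map(decode_cell)` after reading.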