使用这个答案来创建一个分割程序,它不正确地计算了对象。我注意到单独的对象被忽略或成像采集不良。

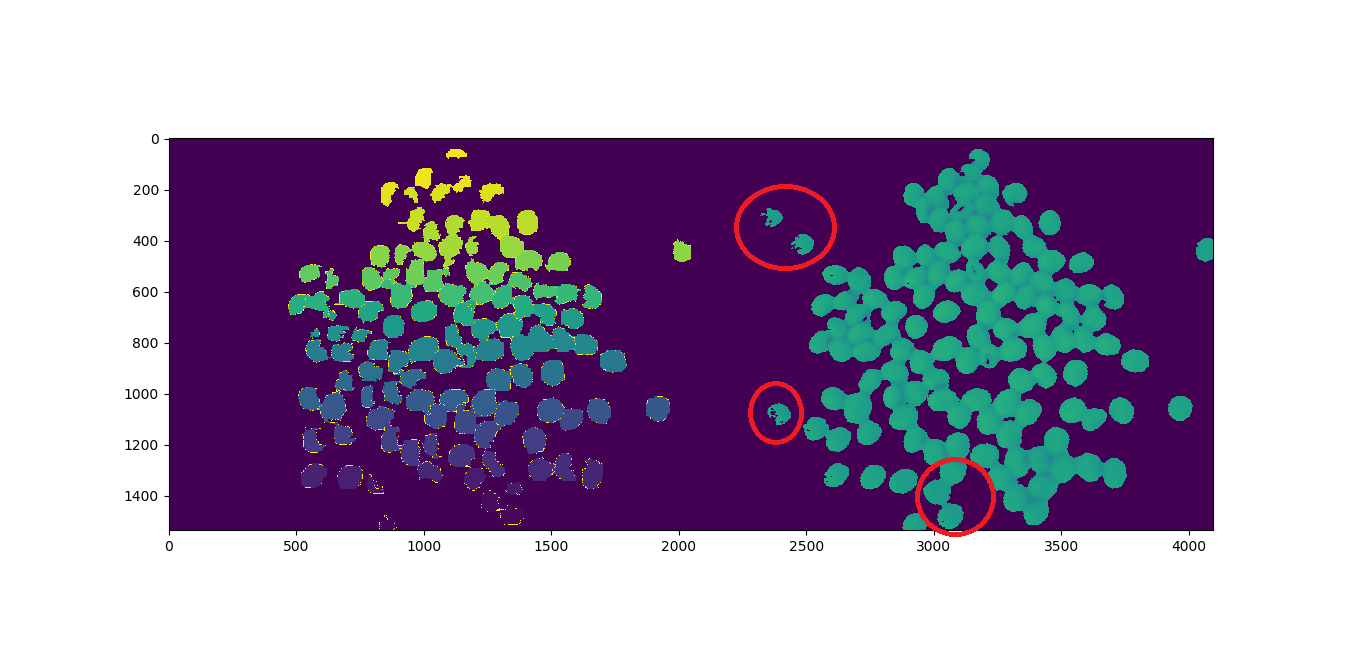

我计算了123个对象,程序返回117个,可以看出,如下所示。用红色圈出的对象似乎不见了:

使用来自720p网络摄像头的以下图像:

import cv2

import numpy as np

import matplotlib.pyplot as plt

from scipy.ndimage import label

import urllib.request

# https://stackoverflow.com/a/14617359/7690982

def segment_on_dt(a, img):

border = cv2.dilate(img, None, iterations=5)

border = border - cv2.erode(border, None)

dt = cv2.distanceTransform(img, cv2.DIST_L2, 3)

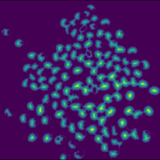

plt.imshow(dt)

plt.show()

dt = ((dt - dt.min()) / (dt.max() - dt.min()) * 255).astype(np.uint8)

_, dt = cv2.threshold(dt, 140, 255, cv2.THRESH_BINARY)

lbl, ncc = label(dt)

lbl = lbl * (255 / (ncc + 1))

# Completing the markers now.

lbl[border == 255] = 255

lbl = lbl.astype(np.int32)

cv2.watershed(a, lbl)

print("[INFO] {} unique segments found".format(len(np.unique(lbl)) - 1))

lbl[lbl == -1] = 0

lbl = lbl.astype(np.uint8)

return 255 - lbl

# Open Image

resp = urllib.request.urlopen("https://i.stack.imgur.com/YUgob.jpg")

img = np.asarray(bytearray(resp.read()), dtype="uint8")

img = cv2.imdecode(img, cv2.IMREAD_COLOR)

## Yellow slicer

mask = cv2.inRange(img, (0, 0, 0), (55, 255, 255))

imask = mask > 0

slicer = np.zeros_like(img, np.uint8)

slicer[imask] = img[imask]

# Image Binarization

img_gray = cv2.cvtColor(slicer, cv2.COLOR_BGR2GRAY)

_, img_bin = cv2.threshold(img_gray, 140, 255,

cv2.THRESH_BINARY)

# Morphological Gradient

img_bin = cv2.morphologyEx(img_bin, cv2.MORPH_OPEN,

np.ones((3, 3), dtype=int))

# Segmentation

result = segment_on_dt(img, img_bin)

plt.imshow(np.hstack([result, img_gray]), cmap='Set3')

plt.show()

# Final Picture

result[result != 255] = 0

result = cv2.dilate(result, None)

img[result == 255] = (0, 0, 255)

plt.imshow(result)

plt.show()

How can I count the missing objects?

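To answer your main question: watershed does not remove single objects. Watershed is working fine in your algorithm. It receives the predefined labels (markers) and performs the segmentation accordingly.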
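A minimal sketch of that point, assuming `scipy.ndimage.watershed_ift` as a stand-in for `cv2.watershed` (both flood an image from a set of markers, but they are different implementations). The scene is hypothetical: a large disk gets a marker, a small isolated disk deliberately does not, mimicking a blob whose distance-transform peak was thresholded away.

```python
import numpy as np
from scipy.ndimage import watershed_ift

# Hypothetical scene on a 100x100 grid: a large disk (r=20) and a small disk (r=5)
yy, xx = np.indices((100, 100))
big = (yy - 30) ** 2 + (xx - 30) ** 2 <= 20 ** 2
small = (yy - 75) ** 2 + (xx - 75) ** 2 <= 5 ** 2
objects = big | small

# Elevation: low inside objects, high on the background (watershed_ift needs uint8/uint16)
elevation = np.where(objects, 0, 255).astype(np.uint8)

# Markers: label 1 on the background, label 2 only at the big disk's centre.
# The small disk receives no marker at all.
markers = np.zeros((100, 100), dtype=np.int32)
markers[~objects] = 1
markers[30, 30] = 2

segments = watershed_ift(elevation, markers)

print(np.unique(segments))  # only the labels that were seeded appear
print(segments[75, 75])     # the unmarked small disk is absorbed by the background label
```

The flood can only hand out labels it was seeded with, so an object that never received a marker cannot become its own segment; the fix has to happen before the watershed, in the marker stage.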

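The problem is that the threshold you set on the distance transform is too high: it removes the weak signal of the single objects, which prevents those objects from being labeled and passed to the watershed algorithm.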
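To see why a single global threshold drops small, isolated objects, here is a sketch on a hypothetical two-disk image. It assumes `scipy.ndimage.distance_transform_edt` (exact Euclidean) as a stand-in for `cv2.distanceTransform(img, cv2.DIST_L2, 3)` and reuses the same min-max normalisation and the threshold of 140 from `segment_on_dt`.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt, label

# Hypothetical scene: one large disk (r=20) and one small isolated disk (r=5)
yy, xx = np.indices((100, 100))
objects = ((yy - 30) ** 2 + (xx - 30) ** 2 <= 20 ** 2) | ((yy - 75) ** 2 + (xx - 75) ** 2 <= 5 ** 2)

dt = distance_transform_edt(objects)

# Min-max normalisation to 0..255, as in segment_on_dt
dt_u8 = ((dt - dt.min()) / (dt.max() - dt.min()) * 255).astype(np.uint8)

# Global threshold, same rule as cv2.threshold(dt, 140, 255, cv2.THRESH_BINARY)
strong = dt_u8 > 140

obj_lbl, n_objects = label(objects)
objects_with_marker = np.unique(obj_lbl[strong])

print(n_objects, len(objects_with_marker))  # 2 objects, only 1 receives any marker pixel
print(dt_u8[75, 75])                        # the small disk's peak stays far below 140
```

Because the normalisation divides by the largest distance in the whole image, a small object's peak is scaled down relative to the big ones, so one global cut-off that suits the large objects erases the small one entirely.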

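The distance-transform signal is weak because the color-segmentation stage segments the objects poorly, which makes it hard to choose a single threshold that both removes the noise and extracts the signal.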

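To fix this, we need to perform proper color segmentation and, when segmenting the distance-transform signal, use an adaptive threshold instead of a single global threshold.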
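A sketch of the adaptive idea on the same hypothetical two-disk image. It assumes a plain box mean (`scipy.ndimage.uniform_filter`) in place of the Gaussian-weighted mean of `cv2.ADAPTIVE_THRESH_GAUSSIAN_C`; the comparison rule (keep a pixel if it exceeds its local mean minus `C`) and the `blockSize=21`, `C=-9` values follow the `cv2.adaptiveThreshold` call in the code below.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt, uniform_filter

# Hypothetical scene: one large disk (r=20) and one small isolated disk (r=5)
yy, xx = np.indices((100, 100))
objects = ((yy - 30) ** 2 + (xx - 30) ** 2 <= 20 ** 2) | ((yy - 75) ** 2 + (xx - 75) ** 2 <= 5 ** 2)

dt = distance_transform_edt(objects)
dt_u8 = ((dt - dt.min()) / (dt.max() - dt.min()) * 255).astype(np.uint8)

def adaptive_mean_threshold(img, block_size=21, c=-9):
    """Keep pixels brighter than (local block mean - c).

    Same rule as cv2.adaptiveThreshold with ADAPTIVE_THRESH_MEAN_C and
    THRESH_BINARY; a box mean is used here instead of a Gaussian one."""
    local_mean = uniform_filter(img.astype(np.float64), size=block_size)
    return img > local_mean - c

global_mask = dt_u8 > 140
adaptive_mask = adaptive_mean_threshold(dt_u8)

# Small disk's peak: rejected by the global rule, kept by the adaptive rule
print(global_mask[75, 75], adaptive_mask[75, 75])
```

The adaptive rule compares each pixel only with its own neighbourhood, so a weak but locally prominent peak still produces a marker, which is what the watershed needs.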

Here is my modified code. I incorporated @user1269942's color-segmentation method into it. Additional explanations are in the code comments.

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
from scipy.ndimage import label
import urllib.request

# https://stackoverflow.com/a/14617359/7690982
def segment_on_dt(a, img, img_gray):
    # Added several elliptical structuring elements for a better morphology process
    struct_big = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))
    struct_small = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3))

    # Increase border size
    border = cv2.dilate(img, struct_big, iterations=5)
    border = border - cv2.erode(img, struct_small)

    dt = cv2.distanceTransform(img, cv2.DIST_L2, 3)
    dt = ((dt - dt.min()) / (dt.max() - dt.min()) * 255).astype(np.uint8)

    # Blur the signal lightly to remove noise
    dt = cv2.GaussianBlur(dt, (7, 7), -1)

    # Adaptive threshold to extract local maxima of the distance transform signal
    dt = cv2.adaptiveThreshold(dt, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 21, -9)
    # _, dt = cv2.threshold(dt, 2, 255, cv2.THRESH_BINARY)

    # Morphology operations to clean the thresholded signal
    dt = cv2.erode(dt, struct_small, iterations=1)
    dt = cv2.dilate(dt, struct_big, iterations=10)
    plt.imshow(dt)
    plt.show()

    # Labeling
    lbl, ncc = label(dt)
    lbl = lbl * (255 / (ncc + 1))
    # Completing the markers now.
    lbl[border == 255] = 255
    plt.imshow(lbl)
    plt.show()

    lbl = lbl.astype(np.int32)
    cv2.watershed(a, lbl)
    print("[INFO] {} unique segments found".format(len(np.unique(lbl)) - 1))
    lbl[lbl == -1] = 0
    lbl = lbl.astype(np.uint8)
    return 255 - lbl

# Open Image
resp = urllib.request.urlopen("https://i.stack.imgur.com/YUgob.jpg")
img = np.asarray(bytearray(resp.read()), dtype="uint8")
img = cv2.imdecode(img, cv2.IMREAD_COLOR)

## Yellow slicer
# Blur to remove noise
img = cv2.blur(img, (9, 9))

# Proper color segmentation in HSV
hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
mask = cv2.inRange(hsv, (0, 140, 160), (35, 255, 255))
# mask = cv2.inRange(img, (0, 0, 0), (55, 255, 255))
imask = mask > 0
slicer = np.zeros_like(img, np.uint8)
slicer[imask] = img[imask]

# Image Binarization
img_gray = cv2.cvtColor(slicer, cv2.COLOR_BGR2GRAY)
_, img_bin = cv2.threshold(img_gray, 140, 255, cv2.THRESH_BINARY)
plt.imshow(img_bin)
plt.show()

# Morphology (added): 10 iterations of opening, then 3 of erosion, both in place
cv2.morphologyEx(img_bin, cv2.MORPH_OPEN, cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3)), img_bin, (-1, -1), 10)
cv2.morphologyEx(img_bin, cv2.MORPH_ERODE, cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (3, 3)), img_bin, (-1, -1), 3)
plt.imshow(img_bin)
plt.show()

# Segmentation
result = segment_on_dt(img, img_bin, img_gray)
plt.imshow(np.hstack([result, img_gray]), cmap='Set3')
plt.show()

# Final Picture
result[result != 255] = 0
result = cv2.dilate(result, None)
img[result == 255] = (0, 0, 255)
plt.imshow(result)
plt.show()
```

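Final result: 124 unique items found. One extra item was found because one of the objects was split in two. With proper parameter tuning you may get the exact number you are looking for, but I would recommend buying a better camera.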

Looking at your code, it is completely reasonable, so I'll make just one small suggestion: use the HSV color space for your `inRange`.

OpenCV documentation on color spaces:

https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_imgproc/py_colorspaces/py_colorspaces.html

Another SO example that uses `inRange` with HSV:

How to detect two different colors using `cv2.inRange` in Python-OpenCV?

And a small code edit for you:

```python
img = cv2.blur(img, (5, 5))  # new addition, just before "## Yellow slicer"

## Yellow slicer
# mask = cv2.inRange(img, (0, 0, 0), (55, 255, 255))  # your line: comment out
hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)  # new addition: convert to HSV
mask = cv2.inRange(hsv, (0, 120, 120), (35, 255, 255))  # new addition: use HSV for inRange, with adjusted values
```
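Why HSV helps can be shown on single pixels. This sketch uses only the standard library: `colorsys` plus a rescaling that approximates OpenCV's 8-bit HSV convention (H in 0..179, S and V in 0..255). The two sample pixels are hypothetical; the two mask ranges are the ones from the question and from this answer.

```python
import colorsys

def bgr_to_opencv_hsv(b, g, r):
    """Approximate OpenCV's 8-bit BGR->HSV: H in 0..179, S and V in 0..255."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 180) % 180, round(s * 255), round(v * 255)

def in_range(px, lo, hi):
    """Inclusive per-channel bounds check, like cv2.inRange on one pixel."""
    return all(l <= c <= u for c, l, u in zip(px, lo, hi))

samples = {
    "yellow": (0, 200, 230),    # hypothetical BGR pixel
    "dark gray": (40, 40, 40),  # hypothetical BGR pixel
}

for name, bgr in samples.items():
    bgr_ok = in_range(bgr, (0, 0, 0), (55, 255, 255))  # question's BGR mask
    hsv_ok = in_range(bgr_to_opencv_hsv(*bgr), (0, 120, 120), (35, 255, 255))  # HSV mask above
    print(f"{name}: BGR mask={bgr_ok}, HSV mask={hsv_ok}")
```

The BGR range only caps the blue channel, so any dark, low-blue pixel passes as "yellow"; the HSV range also requires enough saturation and brightness, which rejects gray and black background.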

Detecting the missing objects

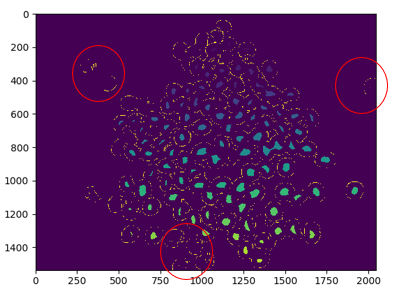

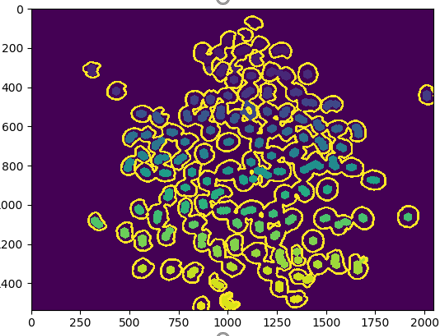


I counted 12 missing objects: 2, 7, 8, 11, 65, 77, 78, 84, 92, 95, 96. Edit: 85 as well.

117 found, 12 missing, 6 wrong.

1st attempt: decrease the mask sensitivity

```python
# mask = cv2.inRange(img, (0, 0, 0), (55, 255, 255))  # current
mask = cv2.inRange(img, (0, 0, 0), (80, 255, 255))  # 1st attempt
```

`inRange` documentation


```
[INFO] 120 unique segments found
```

120 found, 9 missing, 6 wrong.
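The tallies can be cross-checked against the 123 objects counted by hand. This sketch assumes that "wrong" means detections that do not correspond to a real object, so correct = found - wrong, and correct + missing should equal the ground truth.

```python
GROUND_TRUTH = 123  # objects counted by hand in the question

def tally(found, missing, wrong):
    """Assumes 'wrong' counts detections that match no real object."""
    correct = found - wrong
    return correct, correct + missing

for run, found, missing, wrong in [("original", 117, 12, 6), ("1st attempt", 120, 9, 6)]:
    correct, accounted = tally(found, missing, wrong)
    print(f"{run}: {correct} correct, recall {correct / GROUND_TRUTH:.1%}, "
          f"consistent={accounted == GROUND_TRUTH}")
```

Under that reading both runs are internally consistent, and widening the mask recovered 3 of the 12 missing objects without adding wrong detections.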