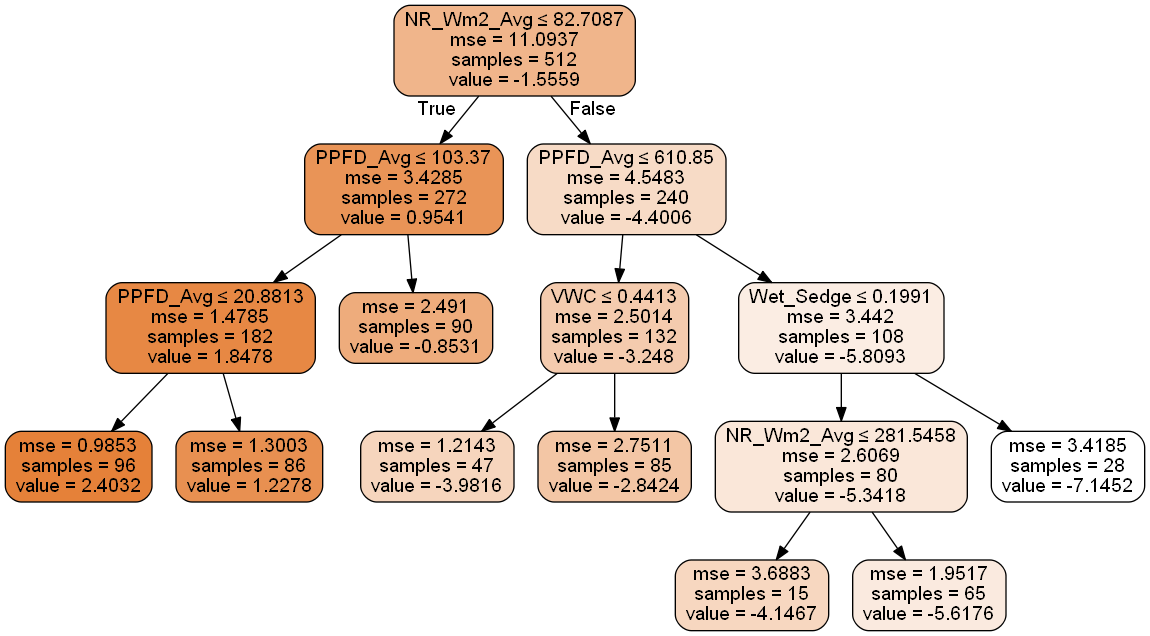

I'm using scikit-learn's regression tree function and graphviz to generate some great, easy-to-interpret visualizations of decision trees:

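```python
import pydotplus
from sklearn import tree

# `Run.reg` (the fitted regression tree) and `Xvar` (the feature names) come from the asker's own code.
dot_data = tree.export_graphviz(Run.reg, out_file=None,
                                feature_names=Xvar,
                                filled=True, rounded=True,
                                special_characters=True)
graph = pydotplus.graph_from_dot_data(dot_data)
graph.write_png('CART.png')
graph.write_svg("CART.svg")
```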

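Also, I've seen some trees where the length of the lines connecting the nodes is proportional to the % of variance explained by the split. I'd like to do that too, if possible.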
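One way to make "% variance explained by the split" concrete for a regression tree: with scikit-learn's default `criterion="squared_error"`, each node's `impurity` is its mean squared error, so `n_samples * impurity` is the node's sum of squared errors (SSE). The drop in SSE from a node to its two children, divided by the root's SSE, is the share of the total variance that split explains. The sketch below computes this from the arrays on a fitted tree's `tree_` attribute (`children_left`, `children_right`, `impurity`, `weighted_n_node_samples`). The function name `variance_explained_per_split` is hypothetical, and the formula is one reasonable reading of "% variance explained", not something taken from the original post.

```python
def variance_explained_per_split(children_left, children_right, impurity, n_samples):
    """Return {node_id: fraction of the root's SSE removed by that node's split}.

    Assumes impurity[i] is node i's mean squared error (sklearn's default
    criterion), so n_samples[i] * impurity[i] is its sum of squared errors.
    """
    total_sse = n_samples[0] * impurity[0]
    explained = {}
    for node, (left, right) in enumerate(zip(children_left, children_right)):
        if left == -1:  # leaf: sklearn marks missing children with -1
            continue
        parent_sse = n_samples[node] * impurity[node]
        child_sse = n_samples[left] * impurity[left] + n_samples[right] * impurity[right]
        explained[node] = (parent_sse - child_sse) / total_sse if total_sse else 0.0
    return explained


# Hypothetical usage with a fitted DecisionTreeRegressor `reg`:
# t = reg.tree_
# explained = variance_explained_per_split(t.children_left, t.children_right,
#                                          t.impurity, t.weighted_n_node_samples)
```

The fractions over all splits, plus the SSE left in the leaves divided by the root's SSE, add up to 1, so the values can be compared directly across the tree.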

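You can get all of the edges with `graph.get_edge_list()` and recolor nodes with `set_fillcolor()`. In the code below, each parent's two children are sorted by node id, so the left child (the lower id) gets the first color and the right child gets the second.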

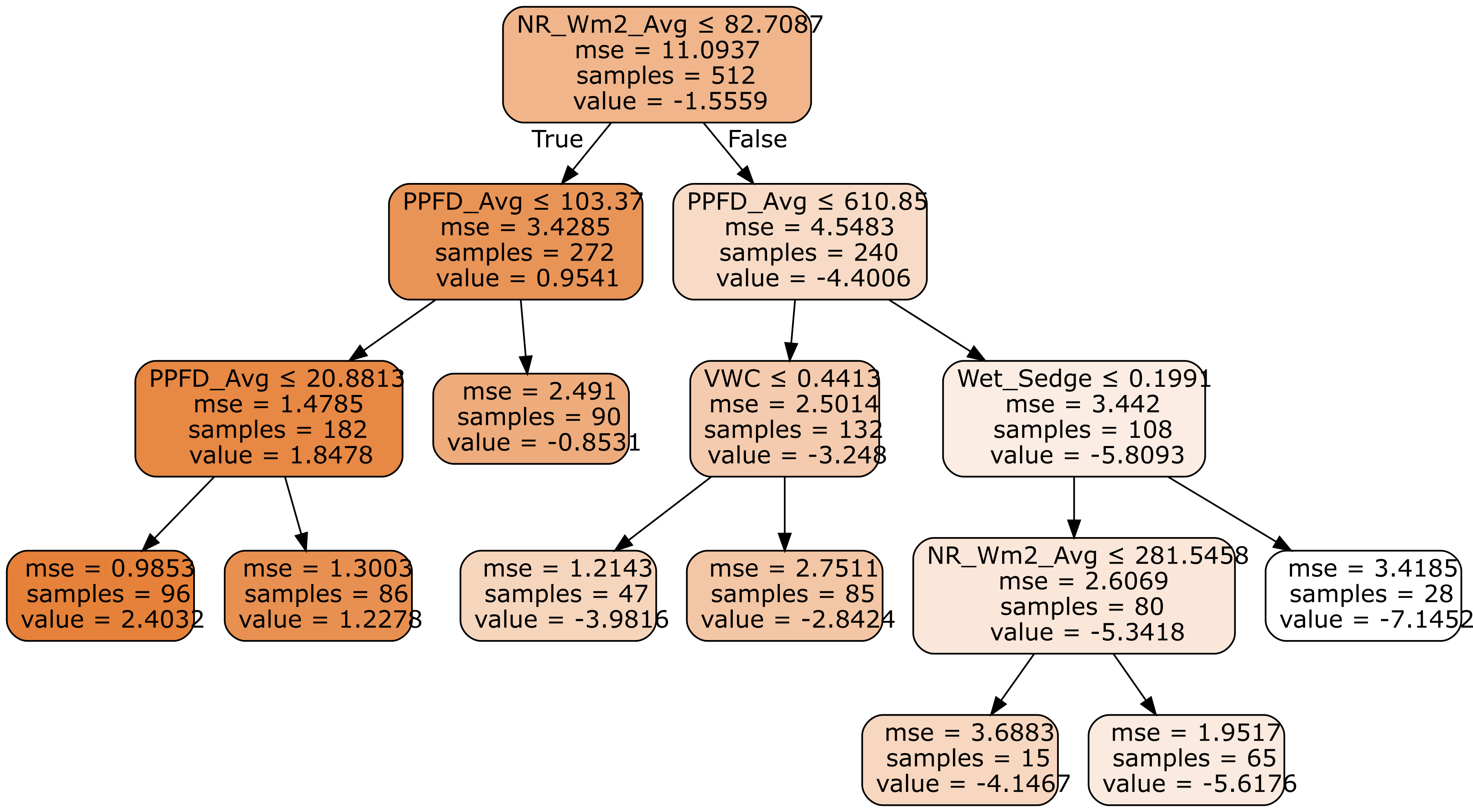

```python
import collections

import pydotplus
from sklearn import tree
from sklearn.datasets import load_iris

clf = tree.DecisionTreeClassifier(random_state=42)
iris = load_iris()
clf = clf.fit(iris.data, iris.target)

dot_data = tree.export_graphviz(clf,
                                feature_names=iris.feature_names,
                                out_file=None,
                                filled=True,
                                rounded=True)
graph = pydotplus.graph_from_dot_data(dot_data)

# colors[0] is used for left children, colors[1] for right children
colors = ('brown', 'forestgreen')

# Map each parent node id to the ids of its two children
edges = collections.defaultdict(list)
for edge in graph.get_edge_list():
    edges[edge.get_source()].append(int(edge.get_destination()))

for edge in edges:
    # The left child has the lower node id, so sorting puts it first
    edges[edge].sort()
    for i in range(2):
        dest = graph.get_node(str(edges[edge][i]))[0]
        dest.set_fillcolor(colors[i])

graph.write_png('tree.png')
```
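The snippet relies on `export_graphviz` giving the left child a lower id than the right one. A minimal sketch of an alternative reads the side directly from `clf.tree_.children_left` and `clf.tree_.children_right`, assuming the DOT node ids equal the indices in the fitted tree's `tree_` arrays (which is how `export_graphviz` names nodes). The helper `side_colors` is hypothetical.

```python
def side_colors(children_left, children_right, colors=("brown", "forestgreen")):
    """Map each child node id to colors[0] if it is a left child, colors[1] if right."""
    fill = {}
    for left, right in zip(children_left, children_right):
        if left == -1:  # leaf: sklearn marks missing children with -1
            continue
        fill[int(left)] = colors[0]
        fill[int(right)] = colors[1]
    return fill


# Hypothetical usage with `clf` and `graph` from the snippet above:
# for node_id, color in side_colors(clf.tree_.children_left,
#                                   clf.tree_.children_right).items():
#     graph.get_node(str(node_id))[0].set_fillcolor(color)
```

The root never appears in the mapping, so it keeps the fill color chosen by `filled=True`.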

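> Also, I've seen some trees where the length of the lines connecting the nodes is proportional to the % variance explained by the split. I'd love to be able to do that too, if possible!?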

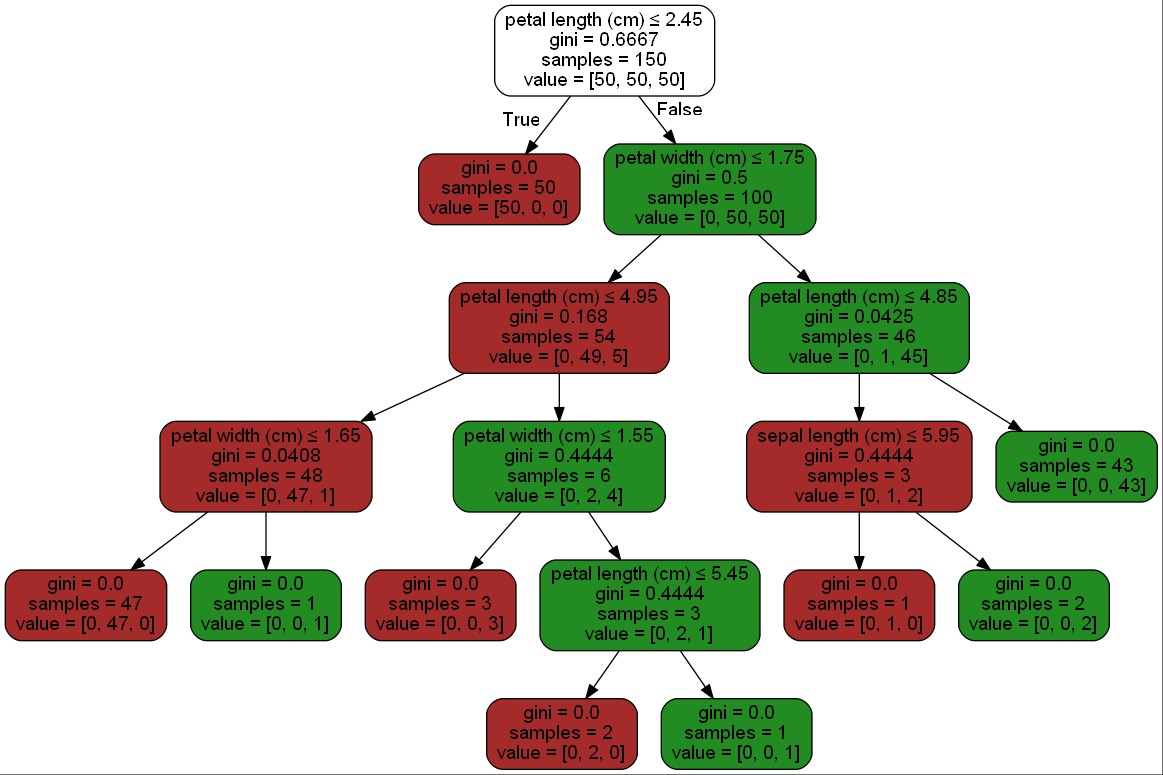

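You could experiment with `set_weight()` and `set_len()`, but that is a bit trickier and takes some fiddling to get right. Here is some code to get you started. Note that Graphviz honors `len` only in the `neato` and `fdp` layouts; the default `dot` layout uses `weight` and `minlen`, where `minlen` is an integer minimum rank difference between the two ends of an edge.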

```python
import re

# Continues the previous snippet: `graph` and `edges` are defined there.
def samples_of(node):
    """Read the "samples = N" count from a node label written by export_graphviz."""
    return int(re.search(r'samples = (\d+)', node.get_attributes()['label']).group(1))

for edge in edges:
    edges[edge].sort()
    src = graph.get_node(edge)[0]
    total_weight = samples_of(src)
    for i in range(2):
        child = str(edges[edge][i])
        # Share of the parent's samples that go to child i
        fraction = samples_of(graph.get_node(child)[0]) / total_weight
        e = graph.get_edge(edge, child)[0]
        e.set_weight(int((1 - fraction) * 100))  # dot expects integer weights
        e.set_len(fraction)     # honored by neato/fdp only
        e.set_minlen(fraction)  # dot treats minlen as an integer rank difference
```
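Because `dot` ignores `len` and reads `minlen` as a whole number of ranks, one way to get edge lengths that roughly track a split's importance is to write an integer `minlen` onto each edge before the DOT text is parsed. The sketch below edits the string returned by `export_graphviz(..., out_file=None)` with a regular expression instead of going through pydotplus. It assumes edge lines have the `0 -> 1 ... ;` form that `export_graphviz` emits, and that `explained` maps each parent node id to a fraction between 0 and 1 (for example, the share of variance explained by that node's split). `add_minlen` and `max_ranks` are hypothetical names.

```python
import re

# Matches edge lines as written by sklearn's export_graphviz, e.g.
#   0 -> 1 [labeldistance=2.5, labelangle=45, headlabel="True"] ;
#   1 -> 2 ;
EDGE_RE = re.compile(r'^(\d+) -> (\d+)(?: \[(.*)\])? ;$')


def add_minlen(dot_source, explained, max_ranks=5):
    """Give every edge parent -> child minlen = max(1, round(explained[parent] * max_ranks))."""
    out = []
    for line in dot_source.splitlines():
        m = EDGE_RE.match(line.strip())
        if m is None:
            out.append(line)  # headers, node definitions, braces: left unchanged
            continue
        src, dst, attrs = m.groups()
        minlen = max(1, round(explained.get(int(src), 0.0) * max_ranks))
        attrs = f"{attrs}, minlen={minlen}" if attrs else f"minlen={minlen}"
        out.append(f"{src} -> {dst} [{attrs}] ;")
    return "\n".join(out)


# Hypothetical usage, with `dot_data` from export_graphviz and `explained`
# a {parent_node_id: fraction} dict:
# graph = pydotplus.graph_from_dot_data(add_minlen(dot_data, explained))
# graph.write_png('CART.png')
```

Clamping to at least one rank keeps weak splits visible as short lines; rounding to whole ranks means the lengths are only approximately proportional, and a larger `max_ranks` gives finer steps at the cost of a taller image.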